Rust is a great language. It gives you a lot of control over memory layout of objects and allocations, but enforces that your use of those objects’ lifetimes are managed correctly via its borrow checking system: if you take a pointer to an object, you have to statically prove to the compiler that the pointer can never outlive the object.

In the majority of cases, this is approximately trivial: you create some objects, get pointers to them and do some set of operations, complete the operations and all the pointers go away, and then destroy all the objects. More complex programs have problems, however, especially if the answer to the question “who is in charge of destroying this object?” doesn’t have a great answer, and requires a bit more work.

C++ has a shared_ptr<T> type for a reference counted pointer to an object. Every time you copy the shared_ptr, it increment a counter; every time you destruct a shared_ptr, you decrement the counter. Once the counter hits zero, you can destroy the object the shared_ptr is pointing to because you know you were the last person that has access to the object, and can lock the door on your way out.

Rust has the same thing in its Rc<T> and Arc<T> types (with Rc being “reference counted” and Arc being “atomically reference counted” - Arc is safe to share between threads, with the count increment/decrement using atomic operations). The difference is that C++ doesn’t fix all memory safety bugs with shared_ptr: dereferencing the shared_ptr gives you a raw pointer, which you can erroneously hold past destroying the shared_ptr it came from, which may destroy the object. Rust’s Rc smartpointers instead give you a borrow to its contents, and thus are guaranteed by the compiler not to outlive the object they’re borrowing from (i.e. the Rc).

Rust also prevents data races through its Arc type: shared_ptr is like Arc in that incrementing and decrementing the count across different threads that are pointing to the same object are safe for both of them, but shared_ptr is still vulnerable to data races, for example if two threads are attempting to write to the object at the same time, or one is writing while another is reading the same memory location. Rust only gives you a read-only pointer to the contents of an Rc or Arc pointer - in order to mutate an object behind an Arc, you need to use one of Rust’s “interior mutability” types, such as RefCell<T>, which does a dynamic check that there is either several read-only pointers to the object, or one read-write pointer, but not both, or else it will panic and crash the program. Usually Rust enforces the read xor write access invariant using its borrow checker system, but because you have non-trivial ownership for objects you can’t use the borrow checker, and have to have those checks at runtime instead of compile time.

This is all well and good. With shared_ptrs or Arc you can make big tangled data structures all pointing to one another. You can make a doubly-linked list, or a graph with edge that are pointers to nodes, or a red-black tree with “parent” links. The problem is that it will leak memory, and eventually your program will get very slow and then die. What went wrong?

Cycles

shared_ptr and Arc have the same problem: they have a counter, which they maintain as the number of existent pointers to this object. If you have a cycle of objects like A<->B, where A and B are two different objects but both have pointers to eachother, then both have a count of 1 and thus can’t be deleted, because they’re still accessible from something. If your program has destroyed every other reference to A or B, so they are unreachable except through the internal pointers to eachother, than they can safely be destroyed! They’re garbage, because there is no possible (correct) way for your program to access that memory. But they won’t be, because the reference counting isn’t smart enough to know the difference between pointers that are reachable and unreachable, and just sees that both objects have a reference-counted pointer somewhere and can’t be destroyed yet.

This is a pretty bad problem to have. The normal solution for this in Rust or C++ is to use an arena allocator, where you allocate a memory region upfront, and then can have objects within that region that can point to other arena objects, and then at the end you free the entire arena. This isn’t a great solution, since you are essentially still leaking memory, it’s just eventually you will free that leaked memory when you teardown the entire arena, and not any time sooner. You can also use a real garbage collector, like shredder or the boehm-demers-weiser GC. These will occasionally do a scan of all reachable objects, freeing everything else - they don’t care about cycles, because if the cycles of objects aren’t reachable from any threads they won’t be marked as reachable, and thus freed because they don’t keep precise reference counts. You can really think of an arena allocator as a bad version of a semi-space garbage collector, where you can only ever do one collection and it always frees everything: this is obviously still useful in that it guarantees that all your memory is reclaimed, but has obvious downsides.

My problem with shredder, or rust-gc, or any of the several other Rust garbage collector libraries is that they’re complex. A lot of them have novel schemes to make sure that your program maintains a set of initial reachable objects (“roots”) correctly at all times, since if it doesn’t you will incorrectly free memory and crash (or worse), and don’t have very high confidence that they are managing those roots correctly and thus have warnings about not being production ready. All(?) of them also have stop-the-world phases where they have to pause every running thread in your process in order to scan all the roots from a coherent view (or don’t support multithreading at all!).

Collection

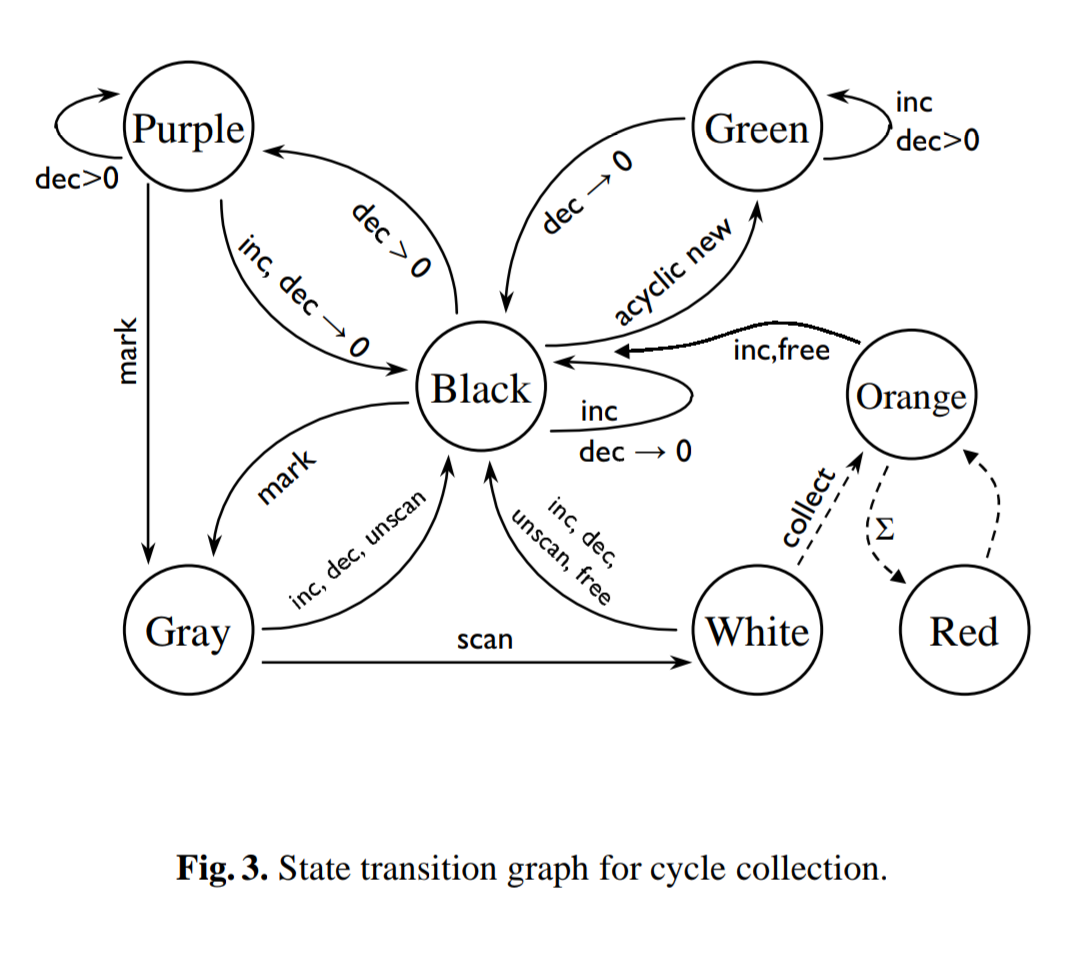

Turns out there’s a neat variant of garbage collection called cycle collection. I was ruminating on how complex all the Rust garbage collectors were a few months ago, and how there isn’t just an Arc-but-doesnt-leak replacement smartpointer you can drop-in to programs that need it, and my addled brain dredged up half-remembered thoughts of when I read Concurrent Cycle Collection in Reference Counted Systems, 2002 a few years before. I then brashly and confidently tweeted about how you could make a very simple Arc-but-doesnt-leak replacement smartpointer library in Rust, and banged out a design based on the general principals I remembered. And then I actually re-read the paper and realized it had object color state transition diagrams that look like this.  Which I had, by pure coincidence, been using as the punchline for jokes about how complex concurrent garbage collection systems since, uh, probably the same several years timespan since I last read the paper. Woops.

Which I had, by pure coincidence, been using as the punchline for jokes about how complex concurrent garbage collection systems since, uh, probably the same several years timespan since I last read the paper. Woops.

The basic idea with the cycle collection scheme is that by default, objects are the same as reference-counting. The difference is that when your count is decremented to a number other than 0, you add the object to a list, and then when doing a garbage collection you scan all the objects in your list (recursively visiting all objects reachable from each object) to see if any of them form graphs of objects where all the objects’ references are accounted for with only internal pointers to eachother. The idea is that in order to form a cycle, you have to have a mutator thread:

- Create an object A

- Create an object B

- Increment A’s refcount and create A2, and set B.ptr = A2

- Set A.ptr = B (the original B)

- Destroy A, which is the last external pointer into the cycle of objects.

Cycle collection handles this by making the “destroy A” step, that is the point where you make a group of objects unreachable, instead essentially mark the objects as potentially unreachable. The scan step then finds all objects that are unreachable, because you know none of the objects in some graph have external pointers, and thus nothing can acquire a new pointer to any of them, and not unreachable, because your scan didn’t find all the pointers, and thus something else by definition has at least one pointer and reference count.

The nice thing about this is that, in the best-case of all your objects just being created with a reference count of 1, and then being used a bit and then decremented from 1->0, objects are just the same as Arc and freed immediately, with no extra work being needed. Even if the Arc’s count was incremented and then decremented twice instead (1->2->1->0) you can still immediately free the object: you know you had the last reference to the object, and thus it can’t possibly be leaked via cycle, and so you can just remove the object from the list and then free it immediately. The recursive scan is only ever needed to free objects that were actually leaking memory via cycles.

We visit objects in order to count how many pointers to them we’re able to find, because if we can find an equal number of pointers to an object as it’s reference count then we know the mutator doesn’t have any pointers, and if we find a disconnected group of those objects then we can free all of them at once since they’re collectively unreachable.

There’s a bunch of complex stuff that you have to handle in order to have correct visiting in the face of concurrrency, unfortunately - if you’re scanning a graph of objects, by the time you scan the last object that first object’s count may have changed. We use a scheme where objects have a bitmap of flags, and are marked VISITED when we first see it, and then if the object is decremented while VISITED it is transitioned to DIRTY. When checking to see if an object is leaked or not we can then also see if it is DIRTY or not, where we know that a mutator had a live reference to the object, and thus it and all reachable objects can be marked not leaked. The object is queued to be added to the next collection’s list instead (since the decrement may have been for the last external reference).

Additionally, consider what happens if we visit struct Foo { a: Mutex<Option<Gc<Bar>>>, b: Mutex<Option<Gc<Bar>>> } concurrently with a mutator swapping the contents of foo.a and foo.b - we could count a as one pointer to whatever it is referencing, and then the mutator swaps the field, and then we could count b as a second pointer to…the exact same object as before, accidentally double-counting the first pointer’s object and under-counting the second’s, which would be very bad. The collector normally takes a read-lock on the Gc<T> objects while it scans them, which either fails if object has an outstanding &mut-reference from a mutator (in which case we obviously know it’s still reachable from something) or succeeds and blocks mutators from acquiring new &mut-references which are required to swap around fields of objects. The entire point of interior mutability, however, is being able to modify fields with only a &-reference! The simple solution, and what I did, is just require users to mark any type that has interior mutable fields containing Gc<T> references, and take a write-lock instead of a read-lock if so, which guarantees the object is completely inaccessible even via &-reference while we visit it.

Samsara

Samsara is my library that provides a Gc<T> type which is a reference counted with cycle collection smartpointer. It is a concurrent garbage collector, with the potentially-unreachable list scanning happening on another thread (only via manual trigger, not automatically via heuristiscs for now). And, unlike every(?) other Rust garbage collector that I know of, it doesn’t have a global stop-the-world phase: it will occasionally block mutator threads if they try to acquire a read-write reference from a Gc object while the collector is scanning it, but that should be very short (since it only holds the read-lock while visting that object, and not the entire subgraph). It doesn’t interrupt threads via signals or anything for safepoints, either, unlike some other garbage collectors.

Right now it’s actually implemented with only safe Rust, plus some unsafe libraries (im for an immutable datastructure, and qcell for an ergonomic get/set API to try and prevent user deadlocks). It is, literally, just Rust’s Arc<T> behind the scenes, plus an RwLock<T> for the interior mutability, with the collector list holding Weak<T> references to objects that need to be scanned. Even if the collector incorrectly frees a set of objects that it thinks aren’t reachable any more, it would panic the user via an Option::unwrap instead of giving a use-after-free.

It isn’t the most efficient currently. When doing a cycle collection it will probably use a lot of memory, especially if every live Gc object is reachable from one of the objects on the list, since it creates a big HashMap of objects and adjacency list of edges. It also currently has the problem where objects are sent to the list, or removed from the list, via sending a message on a channel to the collector thread, which means that if you trigger a collection and then decrement a bunch of Gc objects before it finishes, it will block on the channel since it isn’t being drained while a collection happens. I think that the “list” could instead be maintained via an instrusive doubly-linked-list in the Gc<T> header, in order to allow the mutators to add or remove their objects from the list without needing the collector to do anything; unfortunately it would be hilariously unsafe and a lot of work to make sure it’s sound, and without a concrete usecase that is held back by the current design I probably won’t get around to trying that scheme out for a while.

I don’t claim to be doing anything novel here, for the record! For one, I’m obviously basing this off the paper from 2002, though doing things a little bit different. For another, there are several other Rust crates that also implement the paper! fitzgen’s bacon-rajan-cc and cactusref both are probably equally good if not better fits for anything you’d want to use samsara for - I mostly just nerdsniped myself and thought it would be neat to write this, and so I did.